As an AI researcher and robotics enthusiast, I always keep my eyes peeled for interesting and groundbreaking developments in the field. One area that has been gaining a lot of attention recently is the quest for Artificial General Intelligence (AGI) in robotics. I’ve been considering a new approach for a robot that can maintain itself, and I would like to share it with you today.

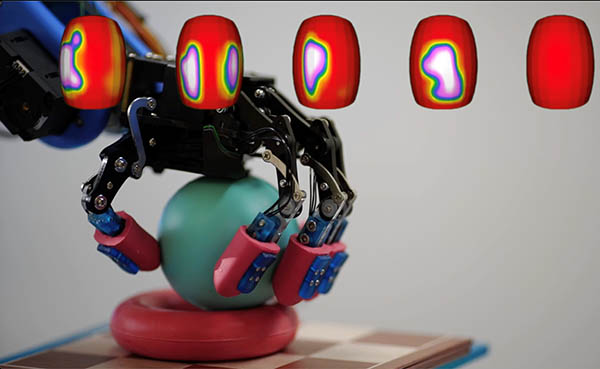

Figure 1: Tactile Awareness Through Sensors

When we talk about self-maintaining robots, we envision machines capable of monitoring their battery levels, heading off to charge when needed, troubleshooting, and even repairing their own parts. Returning to a dock to recharge is already routine for some robots, but troubleshooting and repairing their own hardware implies a degree of intelligence and autonomy that still sits largely in the realm of science fiction. I propose an architecture that might help make this a reality.

This architecture consists of three main components: Perception, Cognition, and Action. Let’s delve deeper into these.

Perception

Perception is all about sensing and interpreting the world around the robot. To handle self-maintenance tasks, our robot requires specialized sensing capabilities. It needs to understand the status of its own ‘health,’ such as battery levels and the condition of its parts. We can equip our robot with a combination of hardware sensors and advanced Computer Vision algorithms to make this happen.

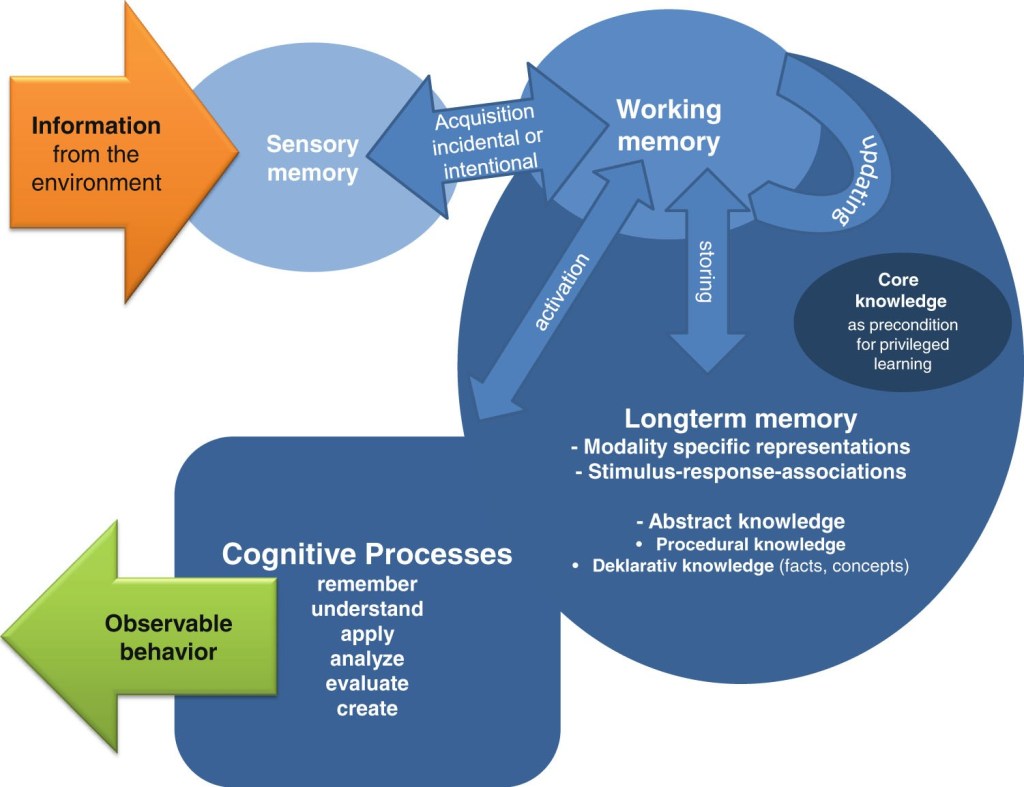

Figure 2: A visual representation of a robotic perception system

Battery Level Sensing: By training a Recurrent Neural Network (RNN) such as an LSTM or GRU on logged sensor data about the robot’s energy consumption, we can have the robot learn its past energy usage patterns and predict when its battery will run out. That prediction is what the rest of the energy management system relies on.
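
To make the prediction target concrete before reaching for an RNN, here is a minimal baseline sketch. It is not the LSTM/GRU model described above: it swaps in an exponentially weighted moving average of the drain rate, which is the kind of simple estimator a learned model would need to beat. The function name, the sampling interval, and the smoothing factor `alpha` are all assumptions for illustration.

```python
from typing import Sequence


def estimate_minutes_remaining(
    levels: Sequence[float],
    interval_min: float = 1.0,
    alpha: float = 0.3,
) -> float:
    """Estimate minutes until the battery is empty.

    levels: battery percentages, oldest first, sampled every `interval_min`
    minutes (hypothetical logging format, not from any specific robot).
    """
    if len(levels) < 2:
        raise ValueError("need at least two readings")

    rate = None  # smoothed drain rate in percent per minute
    for prev, cur in zip(levels, levels[1:]):
        drop = (prev - cur) / interval_min
        # Recent drops count more than old ones (EWMA, not a neural model).
        rate = drop if rate is None else alpha * drop + (1 - alpha) * rate

    if rate <= 0:
        return float("inf")  # battery is holding steady or charging
    return levels[-1] / rate
```

An RNN would replace the single smoothed rate with a learned function of the whole usage history, which matters when the drain depends on what the robot is doing.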

Fault Detection and Localization: Using a Convolutional Neural Network (CNN), the robot can visually inspect its parts to identify signs of wear and tear. Alongside the camera images, the same module can analyze other sensor data (like temperature or pressure readings) to identify malfunctioning components.
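
For the non-visual half of fault detection, a rough sketch of the idea could look like the following. It does not use a CNN; it is a plain z-score check of each component's current reading against its own history, which I am using here as a stand-in. The component names, the data layout, and the threshold of 3 standard deviations are assumptions.

```python
from statistics import mean, stdev
from typing import Dict, List


def find_faulty_components(
    baseline: Dict[str, List[float]],
    current: Dict[str, float],
    z_threshold: float = 3.0,
) -> List[str]:
    """Return the names of components whose current reading is an outlier.

    baseline: past readings per component (e.g. temperatures) from healthy operation.
    current: the latest reading per component.
    """
    faulty = []
    for name, history in baseline.items():
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            continue  # no variation in the history, so a z-score is undefined
        z = abs(current[name] - mu) / sigma
        if z > z_threshold:
            faulty.append(name)
    return sorted(faulty)


# Hypothetical example: the left motor is running much hotter than usual.
baseline = {
    "left_motor": [40.0, 41.0, 39.0, 40.5, 39.5],
    "gripper": [30.0, 30.5, 29.5, 30.2, 29.8],
}
current = {"left_motor": 55.0, "gripper": 30.1}
suspects = find_faulty_components(baseline, current)
```

The list of suspect components is also a natural way to decide where the camera should look, so the visual inspection only has to run on parts that already seem off.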

Cognition

With a thorough perception system in place, we need the robot to make decisions based on this information. This is where the cognition module comes into play. It’s responsible for planning when to charge, how to get to the charging station, and how to repair any identified faults.

Charge Planning: By using reinforcement learning, our robot can learn to take actions that maximize its operational time. Algorithms like Q-learning (for a small set of discrete actions) or Deep Deterministic Policy Gradient (DDPG, for continuous actions) can be used to optimize the robot’s energy management strategy.
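
Here is a minimal sketch of tabular Q-learning on a toy version of the charging decision. Everything about the environment is an assumption I made up for illustration: the battery is discretised into six levels, working earns a reward of +1 and drains one level, charging earns nothing and restores two levels, and running flat costs -10 and ends the episode.

```python
import random

WORK, CHARGE = 0, 1
MAX_LEVEL = 5  # battery levels 0..5; level 0 means the robot is stranded


def step(level, action):
    """Toy environment (assumed dynamics): return (next_level, reward, done)."""
    if action == WORK:
        nxt = level - 1
        if nxt == 0:
            return nxt, -10.0, True  # ran flat away from the charger
        return nxt, 1.0, False  # one unit of useful work done
    return min(level + 2, MAX_LEVEL), 0.0, False  # charging earns nothing


def train(episodes=2000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn a Q-table q[level][action] with epsilon-greedy Q-learning."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(MAX_LEVEL + 1)]
    for _ in range(episodes):
        level = rng.randint(1, MAX_LEVEL)
        for _ in range(20):  # cap the episode length
            if rng.random() < epsilon:
                action = rng.choice((WORK, CHARGE))  # explore
            else:
                action = max((WORK, CHARGE), key=lambda a: q[level][a])  # exploit
            nxt, reward, done = step(level, action)
            target = reward if done else reward + gamma * max(q[nxt])
            q[level][action] += alpha * (target - q[level][action])
            if done:
                break
            level = nxt
    return q


q_table = train()
policy = ["work" if row[WORK] > row[CHARGE] else "charge" for row in q_table[1:]]
```

In this toy setup the learned policy should prefer charging when the battery is nearly empty and working when it is full. A real robot would need a much richer state (distance to the charger, task queue, predicted drain), which is where function approximation and methods like DDPG come in.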

Path Planning: To find the charging station, our robot needs advanced path planning capabilities. Here, we combine traditional path planning algorithms (like A*) with machine learning models. This hybrid approach allows the robot to adapt to complex real-world scenarios.
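
The classical half of that hybrid can be sketched in a few lines. Below is a minimal A* search on a 4-connected grid with a Manhattan-distance heuristic; the grid encoding (`#` for obstacles) and the function name are assumptions for illustration, and the machine learning half (for example, learned traversal costs) is not shown.

```python
import heapq


def a_star(grid, start, goal):
    """Shortest path on a grid of strings where '#' marks an obstacle.

    Returns a list of (row, col) cells from start to goal, or None if the
    goal cannot be reached.
    """
    def h(cell):
        # Manhattan distance: admissible for 4-connected moves of cost 1.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    best_cost = {start: 0}

    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:  # walk back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry, a cheaper route was found later
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < len(grid) and 0 <= nc < len(grid[0])
            if inside and grid[nr][nc] != "#":
                new_cost = cost + 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (new_cost + h((nr, nc)), new_cost, (nr, nc)))
    return None


# Hypothetical map: the robot is top-left, the charger top-right, a wall between.
floor = [
    "..#.",
    "..#.",
    "....",
]
route = a_star(floor, (0, 0), (0, 3))
```

One way to bring learning in without giving up A*'s guarantees is to keep the search as is and let a model predict the per-cell cost, for example higher costs for areas where the robot has previously slipped or been blocked.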

Figure 3: Sample pipeline from environment perception to cognitive processes

Action

The Action module is responsible for carrying out the plans made by the Cognition module. It involves controlling the robot’s movements and actions.

Figure 4: Robot taking appropriate repair action from cognition module

Locomotion: Here we can combine classical control theory with machine learning. For example, Proportional-Integral-Derivative (PID) controllers can be used in conjunction with reinforcement learning algorithms to improve the robot’s movements, making them smoother and more energy-efficient.
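
For reference, the classical part is small. This is a minimal sketch of a discrete-time PID controller; the class name and the gains used in the example are assumptions, and the reinforcement learning part (for instance, an agent that adjusts `kp`, `ki`, and `kd` over time) is left out.

```python
class PID:
    """Textbook PID controller: u = kp*e + ki*integral(e) + kd*de/dt."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measured, dt):
        """Return the control output for one time step of length dt."""
        error = setpoint - measured
        self.integral += error * dt
        # No derivative term on the first call, since there is no previous error.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# Hypothetical usage: drive a wheel speed toward 1.0 (units are up to the robot).
controller = PID(kp=2.0, ki=0.0, kd=0.0)
speed = 0.0
for _ in range(100):
    command = controller.update(setpoint=1.0, measured=speed, dt=0.05)
    speed += command * 0.05  # toy plant: the command acts as an acceleration
```

The appeal of the combination is that the PID loop keeps the low-level behaviour predictable, while the learning component only has to pick gains or setpoints rather than raw motor commands.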

Repair Actions: Depending on the robot’s design, this could involve tasks like replacing parts, tightening screws, etc. We can train our robot to sequence the steps required to perform a repair using reinforcement learning. Additionally, imitation learning can be used to learn from demonstrations of the repair being performed, significantly enhancing the robot’s repair capabilities.

Pipeline

In terms of a pipeline, the data flows from Perception to Cognition and finally to Action. Each module improves continually based on the feedback it receives. For instance, the Perception module can enhance its fault detection capabilities based on feedback from the Cognition and Action modules about whether the identified faults were real and if the repairs were successful. Similarly, the Cognition module can refine its planning strategies based on whether its plans were executed successfully or not.
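
A heavily simplified sketch of that loop, with one feedback path, might look like this. All names are hypothetical, and the "learning" is reduced to nudging a single temperature threshold upward after a false alarm, which stands in for retraining a real fault detector.

```python
from dataclasses import dataclass


@dataclass
class Perception:
    threshold: float = 50.0  # assumed temperature limit for reporting a fault

    def detect(self, temperature: float) -> bool:
        return temperature > self.threshold

    def feedback(self, fault_confirmed: bool, step: float = 1.0) -> None:
        # A false alarm makes the detector slightly less sensitive.
        if not fault_confirmed:
            self.threshold += step


def cognition(fault_reported: bool) -> str:
    """Decide what to do with the perception result."""
    return "repair" if fault_reported else "continue"


def action(plan: str, part_is_faulty: bool) -> bool:
    """Stand-in for executing the plan; True means a repair fixed a real fault."""
    return plan == "repair" and part_is_faulty


def run_cycle(perception: Perception, temperature: float, part_is_faulty: bool) -> str:
    """One pass of Perception -> Cognition -> Action, then feedback to Perception."""
    plan = cognition(perception.detect(temperature))
    if plan == "repair":
        perception.feedback(fault_confirmed=action(plan, part_is_faulty))
    return plan
```

Calling `run_cycle` with a reading just above the threshold on a healthy part triggers a repair attempt, finds nothing wrong, and raises the threshold, so the same reading would be ignored next time.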

While the proposed framework presents a fascinating approach to creating robust, self-maintaining robot systems, there are a few important considerations we must bear in mind.

Building such a system is a highly interdisciplinary endeavor, involving expertise in robotics, computer vision, natural language processing, reinforcement learning, and control theory. The combination of these diverse fields opens up new horizons for innovation but also brings in complexity that needs careful handling.

Furthermore, such a system would require extensive training and tuning along with a substantial amount of high-quality data. This could be a potential bottleneck considering the practical limitations of gathering this data.

Safety, reliability, and ethical considerations also become pivotal when designing such autonomous systems. It’s vital to ensure the system behaves as expected and does not pose risks to its environment or itself.

Figure 5: High level overview of proposed framework

In conclusion, as we edge closer to the dream of AGI in robotics, self-maintenance capabilities stand as a significant milestone. The path towards achieving this is certainly challenging, but with the advancements in AI and robotics, it’s exciting to imagine the possibilities. This could be a giant leap towards autonomous machines capable of exploring hostile environments like the deep sea, deserts, or even other planets, where human intervention might not be feasible.

As we continue to push the boundaries, I look forward to sharing and discussing more exciting developments in the future. Until then, keep exploring, and let your imagination drive your innovation!
